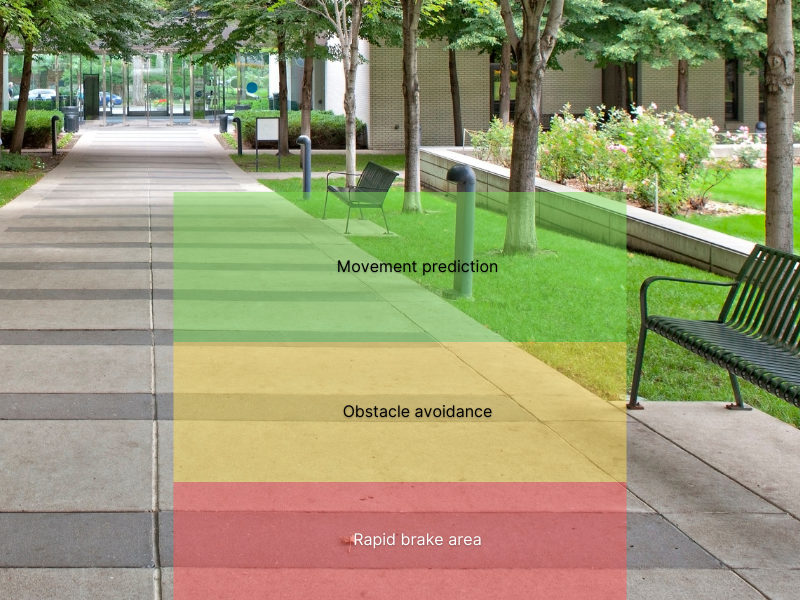

Autonomous mobile robots need a reliable understanding of their immediate surroundings to avoid colliding with obstacles and to complete their tasks with a high level of confidence.

Obstacle detection

In order not to collide with people and other autonomous machines, the robot has to monitor the close-range area for rapid obstacle avoidance and more distant areas of the environment to predict what can happen next.

Our software efficiently detects stationary and moving objects nearby and makes the robot’s response depend on their proximity and speed. The operating parameters are always adjusted to the properties of the machine.
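To make the idea of a proximity- and speed-dependent response concrete, here is a minimal sketch, not the actual product logic. It caps the robot's forward speed using two terms: distance to each obstacle and time to collision. The `Obstacle` type, the `speed_limit` function, and every threshold value are hypothetical and would have to be tuned per machine.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float         # range to the obstacle, in metres
    closing_speed_mps: float  # positive when the gap is shrinking


def speed_limit(obstacles, max_speed_mps=1.5, stop_distance_m=0.3,
                slow_distance_m=2.0, min_ttc_s=2.0):
    """Return an allowed forward speed given the detected obstacles.

    All thresholds are illustrative assumptions, not values from the product.
    """
    limit = max_speed_mps
    for ob in obstacles:
        if ob.distance_m <= stop_distance_m:
            return 0.0  # too close: stop regardless of relative speed
        margin = ob.distance_m - stop_distance_m
        # Proximity term: ramp linearly between the stop and slow distances.
        frac = margin / (slow_distance_m - stop_distance_m)
        proximity_limit = max_speed_mps * min(1.0, frac)
        # Speed term: time to collision if the gap keeps closing at this rate.
        if ob.closing_speed_mps > 0:
            ttc_s = margin / ob.closing_speed_mps
            ttc_limit = max_speed_mps * min(1.0, ttc_s / min_ttc_s)
        else:
            ttc_limit = max_speed_mps  # obstacle is static or moving away
        limit = min(limit, proximity_limit, ttc_limit)
    return limit
```

With these assumed defaults, a distant but fast-approaching object can slow the robot just as much as a nearby static one, which is the behaviour described above.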

Motion analysis

A 3D environment filled with agents and moving objects needs to be analyzed so that the robot is aware of what has already happened or can happen, can predict future events, and can act accordingly.

Motion analysis can monitor the state of the load carried by a robot, help avoid collisions and predict the motion of the visible objects.

In our technology, motion analysis also makes data processing more efficient: it identifies what has not changed between frames and therefore does not need to be processed again in each frame.
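One simple way to decide which parts of a frame can be skipped is block-wise frame differencing. The sketch below is an illustration under that assumption, not a description of the actual pipeline. The function name `changed_blocks`, the block size, and the threshold are all hypothetical.

```python
import numpy as np


def changed_blocks(prev, curr, block=8, threshold=10.0):
    """Mark image blocks whose mean absolute change exceeds `threshold`.

    `prev` and `curr` are 2D grayscale frames of equal shape. The result is
    a boolean grid with one entry per block; False means "unchanged, skip".
    """
    h, w = prev.shape
    gh, gw = h // block, w // block
    diff = np.abs(curr.astype(np.float32) - prev.astype(np.float32))
    diff = diff[:gh * block, :gw * block]  # drop any partial border blocks
    # Split into (grid row, row in block, grid col, col in block) and average.
    per_block = diff.reshape(gh, block, gw, block).mean(axis=(1, 3))
    return per_block > threshold
```

Only the blocks flagged `True` would then be passed on to more expensive processing.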

Different types of optical flow techniques may be applied depending on the task and the computational capacity of the specific machine.
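As an example of a lightweight optical flow technique, the sketch below uses the classic Lucas-Kanade least-squares formulation to estimate a single displacement for a whole image patch. It is one illustrative option for low-powered machines, not necessarily the variant used in the product. The function name `lucas_kanade_patch` is hypothetical, and the method assumes small motion and constant brightness.

```python
import numpy as np


def lucas_kanade_patch(prev, curr):
    """Estimate one (dx, dy) displacement in pixels for a whole patch.

    Solves the brightness-constancy equation Ix*dx + Iy*dy + It = 0
    in the least-squares sense over all pixels of the patch.
    """
    prev = prev.astype(np.float64)
    curr = curr.astype(np.float64)
    # Spatial gradients of the frame average; np.gradient returns (d/dy, d/dx).
    iy, ix = np.gradient(0.5 * (prev + curr))
    it = curr - prev  # temporal gradient
    a = np.stack([ix.ravel(), iy.ravel()], axis=1)
    b = -it.ravel()
    flow, *_ = np.linalg.lstsq(a, b, rcond=None)
    return flow  # array([dx, dy])
```

A dense method would repeat this estimate per window or per pixel at higher cost, which is the trade-off between task and computational capacity mentioned above.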

Object location and recognition

The ability to reliably recognize visible objects allows the robot to complete tasks with a high rate of success, for example to find a rack where a package should be placed or an item that should be picked up.

It also lets the robot classify objects as stationary or non-stationary and decide whether or not it is safe to pass close by.

Object recognition is one of the most important tasks of Robotic Intelligence, and there was so much room for improvement in this area that we paid special attention to it and opened a 12-month R&D project just to optimize it.

Our technology adapts to the needs of the application: object recognition can be set up to analyze not only visual (color) attributes but also structural (depth) ones. In addition, when objects have to be recognized with low computational power, one possible scenario is to use only edge data, just as our brains can recognize objects while seeing only their boundaries.
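To show what "edge data" can look like as an input, here is a minimal sketch that reduces a grayscale image to a binary edge map with a 3x3 Sobel operator. Sobel is used purely as a well-known example; the source does not state which edge extractor is used. The function name `sobel_edges` and the threshold are hypothetical.

```python
import numpy as np


def sobel_edges(gray, threshold=100.0):
    """Return a boolean edge map for a 2D grayscale image."""
    g = np.pad(gray.astype(np.float64), 1, mode="edge")
    # Horizontal gradient: right column minus left column, weighted 1-2-1.
    gx = (g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:]) \
        - (g[:-2, :-2] + 2 * g[1:-1, :-2] + g[2:, :-2])
    # Vertical gradient: bottom row minus top row, weighted 1-2-1.
    gy = (g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:]) \
        - (g[:-2, :-2] + 2 * g[:-2, 1:-1] + g[:-2, 2:])
    return np.hypot(gx, gy) > threshold
```

The resulting map has one bit per pixel and is mostly empty, so a recognizer that works on it has far less data to process than one that works on full color and depth.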

SLAM (Simultaneous Localization and Mapping)

Many autonomous robots are equipped with sensors that report the machine’s location (e.g. GPS). But this does not provide all the useful information.

It is also worth knowing the machine’s orientation within its environment. In such a case we can apply the SLAM (Simultaneous Localization and Mapping) technique, in which we look for key visual features that are characteristic of the specific scene.

This way the machine is aware not only of its physical location but is also much more confident about the direction in which it should drive to complete the task.
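The localization half of this idea can be sketched in a few lines. The example is deliberately simplified and is not a full SLAM system: it assumes the key features have already been detected and matched, works in 2D only, and does no map building. Given feature positions stored in the map and the same features as seen from the robot, a least-squares rigid alignment (the Kabsch method) recovers the robot's heading and position. The function name `estimate_pose_2d` is hypothetical.

```python
import numpy as np


def estimate_pose_2d(map_pts, seen_pts):
    """Find heading and position such that map_pt ~= R(heading) @ seen_pt + t.

    `map_pts` and `seen_pts` are (N, 2) arrays of matched features, in the
    map frame and the robot frame respectively (matching is assumed done).
    """
    map_pts = np.asarray(map_pts, dtype=float)
    seen_pts = np.asarray(seen_pts, dtype=float)
    mc, sc = map_pts.mean(axis=0), seen_pts.mean(axis=0)
    # Cross-covariance of the centred point sets.
    h = (seen_pts - sc).T @ (map_pts - mc)
    u, _, vt = np.linalg.svd(h)
    # Guard against a reflection so that R is a proper rotation.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    r = vt.T @ np.diag([1.0, d]) @ u.T
    t = mc - r @ sc
    heading = np.arctan2(r[1, 0], r[0, 0])
    return heading, t
```

Here `heading` is the robot's orientation in the map frame, in radians, and `t` is its position. This is the extra information that a position-only sensor such as GPS does not supply.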